Independent events

We say two events $A$ and $B$ are independent if $P(A \cap B) = P(A)P(B)$.

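Events $A_1, \dots, A_n$ are said to be (mutually) independent if $P(A_1 \cap \dots \cap A_n) = P(A_1) \cdots P(A_n)$ and any subcollection of $A_1, \dots, A_n$ containing at least two but fewer than $n$ events is mutually independent.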

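Note that if $A_1, \dots, A_n$ are pairwise independent, they need not be independent as a collection. For example, let $\Omega = \{1, 2, 3, 4\}$ and let the probability function assign a probability of $1/4$ for each element in $\Omega$. Consider the events $A_1 = \{1, 2\}$, $A_2 = \{1, 3\}$, and $A_3 = \{1, 4\}$. $P(A_i) = 1/2$ for each $i$, and $P(A_i \cap A_j) = 1/4 = P(A_i)P(A_j)$ for $i \neq j$. Thus, the $A_i$s are pairwise independent. But, $P(A_1 \cap A_2 \cap A_3) = 1/4 \neq 1/8 = P(A_1)P(A_2)P(A_3)$.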
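The gap between pairwise and mutual independence can be checked by brute force. The following is a minimal sketch, assuming a uniform four-point sample space and three two-element events sharing one outcome; the helper names `prob` and `satisfies_product_rule` are illustrative and not part of the notes.

```python
from fractions import Fraction
from itertools import combinations

# Assumed example: four equally likely outcomes and three events.
omega = {1, 2, 3, 4}
events = [{1, 2}, {1, 3}, {1, 4}]

def prob(event):
    """Uniform probability of an event (a subset of omega)."""
    return Fraction(len(event), len(omega))

def satisfies_product_rule(collection):
    """Check P(intersection) == product of P(A) for this collection only."""
    intersection = set(omega)
    product = Fraction(1)
    for e in collection:
        intersection &= e
        product *= prob(e)
    return prob(intersection) == product

# Pairwise independence: the product rule holds for every pair.
pairwise = all(satisfies_product_rule(pair) for pair in combinations(events, 2))
# Mutual independence additionally needs the rule for the full collection.
mutual = pairwise and satisfies_product_rule(events)
print(pairwise, mutual)  # True False
```

Exact rational arithmetic via `Fraction` avoids any floating-point doubt when comparing the two sides of the product rule.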

The notion of independence is frequently used to construct probability spaces corresponding to repetitions of the same experiment, where the outcome of each iteration of the experiment is not influenced by the results of the other iterations. Let $(\Omega_i, P_i)$ be a discrete or finite probability space modeling the $i$th iteration of the experiment. The probability space for $n$ iterations of the experiment can be constructed like so: $\Omega = \Omega_1 \times \dots \times \Omega_n$, where $P$ is defined on the elementary events like so: $P(\{(\omega_1, \dots, \omega_n)\}) = P_1(\{\omega_1\}) \cdots P_n(\{\omega_n\})$ (it is easy to verify that this definition satisfies the properties of a probability measure). Note that the event $A \subseteq \Omega_i$ occurring in the $i$th iteration would correspond to the event $\Omega_1 \times \dots \times \Omega_{i-1} \times A \times \Omega_{i+1} \times \dots \times \Omega_n$ in the new probability space. As we would expect, this construction makes events that belong entirely to different iterations independent.

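For example, let the experiment we wish to repeat be a coin toss. The probability space modeling a single coin toss is $\Omega_1 = \{0, 1\}$ with $P_1(\{1\}) = p$ and $P_1(\{0\}) = 1 - p$. The probability space modeling $n$ iterations of the experiment would have a sample space $\Omega$ of $n$-tuples consisting of $0$s and $1$s. The probability of an elementary event would be computed like so:

$$P(\{(\omega_1, \dots, \omega_n)\}) = \prod_{i=1}^{n} p^{\omega_i}(1-p)^{1-\omega_i} = p^{k}(1-p)^{n-k}, \qquad k = \omega_1 + \dots + \omega_n.$$

Going a little further, it is evident that all elementary events which produce the same total number of $1$s have the same probability. Let’s associate with every elementary event $\omega$ in $\Omega$ a number $X(\omega)$ which counts the number of $1$s that appear in the event. $X$ is what is called a discrete random variable. Since exactly $\binom{n}{k}$ elementary events contain $k$ $1$s, one can say that the probability of getting $k$ $1$s is

$$P(X = k) = \binom{n}{k} p^{k}(1-p)^{n-k}, \qquad k = 0, 1, \dots, n.$$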
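The repeated-experiment construction can be made concrete by enumerating the product space for a small number of tosses. This is a sketch under stated assumptions: the values `n = 4` and `p = 1/3` are arbitrary illustrations, and the function names `product_space` and `count_ones_pmf` are hypothetical.

```python
from fractions import Fraction
from itertools import product
from math import comb

def product_space(n, p):
    """Map each n-tuple of 0s and 1s to its probability under independent tosses."""
    single = {1: p, 0: 1 - p}          # single-toss probabilities
    space = {}
    for omega in product((0, 1), repeat=n):
        prob = Fraction(1)
        for outcome in omega:          # multiply the per-iteration probabilities
            prob *= single[outcome]
        space[omega] = prob
    return space

def count_ones_pmf(space):
    """Distribution of X(omega) = number of 1s in omega."""
    pmf = {}
    for omega, prob in space.items():
        k = sum(omega)
        pmf[k] = pmf.get(k, Fraction(0)) + prob
    return pmf

n, p = 4, Fraction(1, 3)               # illustrative values, not from the notes
space = product_space(n, p)
pmf = count_ones_pmf(space)

# Compare against the closed form C(n, k) p^k (1 - p)^(n - k).
closed_form = {k: comb(n, k) * p**k * (1 - p)**(n - k) for k in range(n + 1)}
print(pmf == closed_form)
```

Enumeration grows as $2^n$, so this is only meant as a check for small $n$, not as a way to compute the distribution in general.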

Discrete random variables

Definition 1.

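A discrete real valued random variable $X$ on a probability space $(\Omega, \mathcal{A}, P)$ is a function $X : \Omega \to \mathbb{R}$ with finite or countably infinite range, such that $\{\omega : X(\omega) = x\} \in \mathcal{A}$ for all $x \in \mathbb{R}$.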

Important

All random variables/vectors we will deal with before the midsem are going to be discrete ones.

$\{\omega : X(\omega) = x\}$ is usually shortened to $\{X = x\}$. In the previous example, $\{X = k\}$ would be the event corresponding to getting $k$ ones.

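Note that if $X$ is a random variable on $(\Omega, \mathcal{A}, P)$ and $g : \mathbb{R} \to \mathbb{R}$ is any function, then $g(X)$ is also a random variable, since $\{g(X) = y\} = \bigcup_{x : g(x) = y} \{X = x\}$, which is a countable union of events because $X$ takes at most countably many values.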
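The decomposition of an event about a function of $X$ into events about $X$ itself can be seen on a small finite space. This is a rough sketch, assuming a sample space of three coin tosses, $X$ counting the $1$s, and a hypothetical non-injective function `g`; none of these specific choices come from the notes.

```python
from itertools import product

omega = list(product((0, 1), repeat=3))    # assumed sample space: three tosses

def X(w):
    """Number of 1s in the outcome w."""
    return sum(w)

def g(x):
    """Hypothetical function applied to X; deliberately not injective."""
    return (x - 1) ** 2

def event(predicate):
    """The set of outcomes satisfying the predicate."""
    return {w for w in omega if predicate(w)}

y = 1
# The event {g(X) = y}, computed directly.
direct = event(lambda w: g(X(w)) == y)
# The values x of X with g(x) = y, and the union of the events {X = x}.
xs = {X(w) for w in omega if g(X(w)) == y}
union = set().union(*(event(lambda w, x=x: X(w) == x) for x in xs))
print(direct == union)
```

Here two different values of $X$ map to the same $y$, so the union genuinely has more than one piece.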

Discrete density functions

Definition 2.

The real valued function $f$ defined on $\mathbb{R}$ by $f(x) = P(X = x)$ is called the discrete density function or discrete mass function of $X$. A number $x$ is called a possible value of $X$ if $f(x) > 0$.

Properties of the probability mass function that should be obvious:

- $f(x) \geq 0$ for all $x \in \mathbb{R}$, and $f(x) > 0$ for at most countably many $x$.

- $\sum_{x : f(x) > 0} f(x) = 1$.

Also, for any function $f$ satisfying the above properties, there exists a probability space and a random variable $X$ with mass function $f$ (to show its existence, take the trivial example: let $\Omega$ be the set of $x$ with $f(x) > 0$, put $P(\{x\}) = f(x)$, and let $X(\omega) = \omega$). This result assures us that statements like “Let $X$ be a random variable with discrete density $f$” always make sense, even if we do not specify directly a probability space upon which $X$ is defined.
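The existence argument can be sketched in code for a mass function with finitely many possible values. This is only an illustration under that finiteness assumption: the dictionary `f` and the helper names `is_mass_function` and `canonical_space` are hypothetical, and a countably infinite support would need a different representation.

```python
from fractions import Fraction

def is_mass_function(f):
    """f is a dict {x: f(x)} listing the points where f may be nonzero."""
    return all(v >= 0 for v in f.values()) and sum(f.values()) == 1

def canonical_space(f):
    """Return (omega, P, X): omega = possible values, P({x}) = f(x), X(w) = w."""
    omega = [x for x, v in f.items() if v > 0]
    P = {x: f[x] for x in omega}
    X = lambda w: w                      # the identity map on omega
    return omega, P, X

f = {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}  # illustrative values
omega, P, X = canonical_space(f)

# Recover the mass function of X from the constructed space.
mass_of_X = {x: sum(P[w] for w in omega if X(w) == x) for x in omega}
print(is_mass_function(f), mass_of_X == f)
```

The random variable is simply the identity on its own set of possible values, which is why the construction is called trivial.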

Binomial distribution

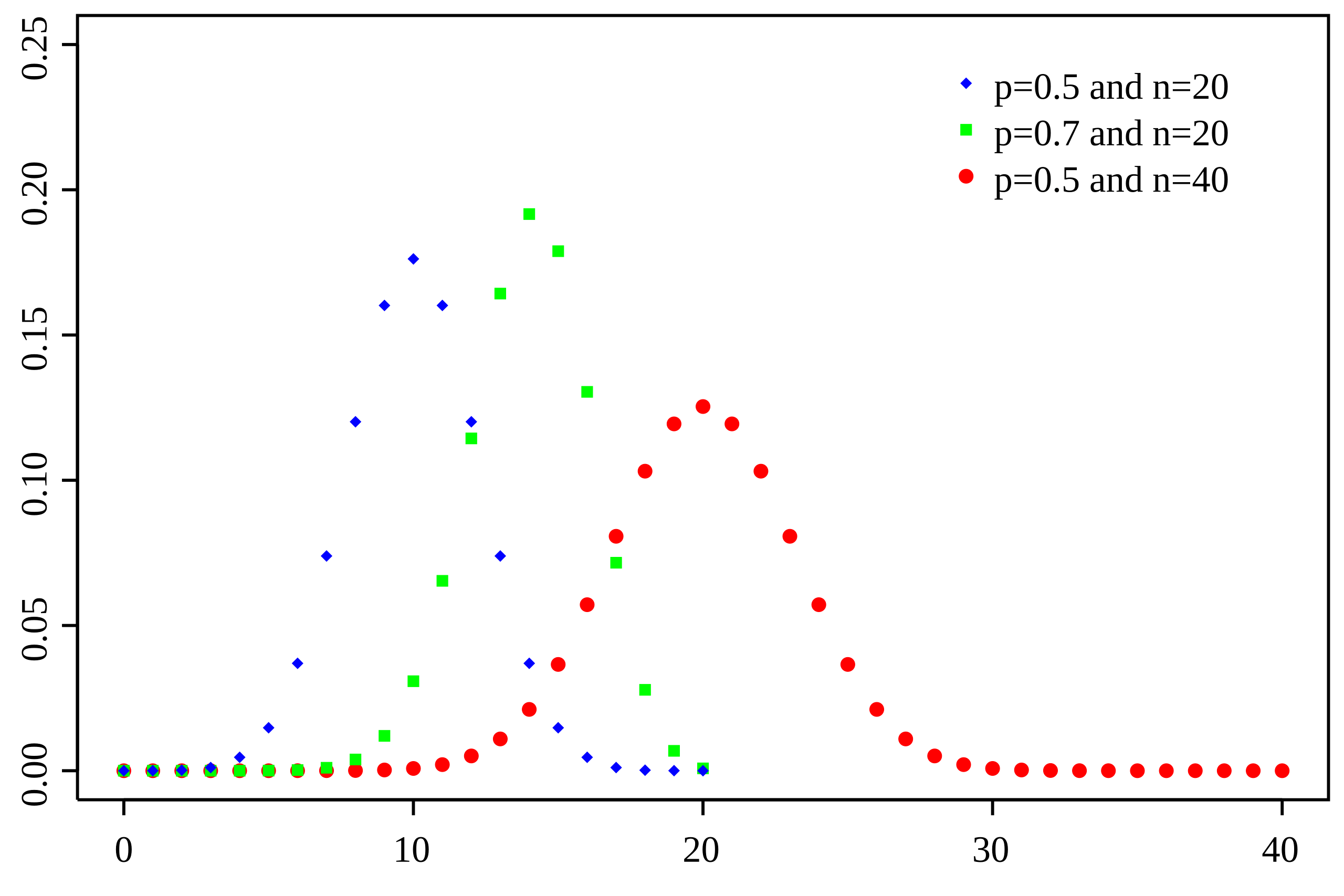

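Consider $n$ independent repetitions of a simple success-failure experiment, like the coin tossing one discussed above, where $p$ is the probability of success in a single trial. Let $S_n$ denote the number of successes in $n$ trials. Then, $S_n$ is a random variable that can only assume the values $0, 1, \dots, n$. The probability density of $S_n$, namely $f(k) = \binom{n}{k} p^{k}(1-p)^{n-k}$ for $k = 0, 1, \dots, n$ and $f(x) = 0$ elsewhere, is called the binomial density with parameters $n$ and $p$.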

The outcome of performing $n$ Bernoulli trials with fixed parameter $p$ can be given by the random vector $X = (X_1, \dots, X_n)$, with $X_i = 1$ and $X_i = 0$ signaling success and failure in the $i$th trial respectively. We know that the random variable $S_n = X_1 + \dots + X_n$ is binomially distributed with parameters $n$ and $p$, as shown above. Turning this around, we can say that any random variable that is binomially distributed with these same parameters can be thought of as the sum of $n$ independent Bernoulli random variables each having parameter $p$.
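The claim that a sum of independent Bernoulli variables is binomial can be checked exactly for small cases. The sketch below relies on the standard convolution rule for the mass function of a sum of independent integer-valued random variables, which the notes do not state; the parameters `n = 5`, `p = 2/5` and the function names are illustrative assumptions.

```python
from fractions import Fraction
from math import comb

def convolve(pmf_a, pmf_b):
    """Mass function of the sum of two independent integer-valued random variables."""
    out = {}
    for a, pa in pmf_a.items():
        for b, pb in pmf_b.items():
            out[a + b] = out.get(a + b, Fraction(0)) + pa * pb
    return out

def sum_of_bernoullis(n, p):
    """Mass function of X_1 + ... + X_n for independent Bernoulli(p) variables."""
    bernoulli = {0: 1 - p, 1: p}
    total = {0: Fraction(1)}             # mass function of the empty sum
    for _ in range(n):
        total = convolve(total, bernoulli)
    return total

n, p = 5, Fraction(2, 5)                 # illustrative values, not from the notes
pmf = sum_of_bernoullis(n, p)
binomial = {k: comb(n, k) * p**k * (1 - p)**(n - k) for k in range(n + 1)}
print(pmf == binomial)
```

Adding one Bernoulli variable at a time mirrors the idea of building the binomial count trial by trial.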

The distribution function

What follows is valid for all probability spaces.

Definition 3.

If $X$ is a random variable on $(\Omega, \mathcal{A}, P)$, define its distribution function $F$ by $F(x) = P(X \leq x)$ for $x \in \mathbb{R}$.

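Theorem 4 (Proposition).

The distribution function $F$ of a random variable $X$ satisfies:

- $0 \leq F(x) \leq 1$ for all $x$.

- $F$ is nondecreasing.

- $\lim_{x \to -\infty} F(x) = 0$ and $\lim_{x \to +\infty} F(x) = 1$.

- $F(x+) = F(x)$ for all $x$, where $F(x+)$ denotes the limit of $F(y)$ as $y$ decreases to $x$ (right continuity).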

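The fourth point is shown by considering any monotone decreasing sequence $x_n$ converging to $x$, and observing that

$$F(x) = P(X \leq x) = P\Big(\bigcap_{n} \{X \leq x_n\}\Big) = \lim_{n \to \infty} P(X \leq x_n) = \lim_{n \to \infty} F(x_n),$$

where the facts that the events $\{X \leq x_n\}$ decrease to $\{X \leq x\}$ and that $P$ is continuous along decreasing sequences of events have been used. A closely related result is:

$$F(x-) = \lim_{y \uparrow x} F(y) = P(X < x).$$

It follows that $P(X = x) = F(x) - F(x-)$. This will be important when we discuss continuous random variables.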
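For a discrete random variable, the jump of the distribution function at a point equals the mass at that point, and this can be checked numerically. The following is a sketch assuming a binomial mass function with the illustrative parameters `n = 3` and `p = 1/2`; the names `F` and `F_left` are chosen here for the distribution function and its left limit.

```python
from fractions import Fraction
from math import comb

n, p = 3, Fraction(1, 2)                 # illustrative parameters
f = {k: comb(n, k) * p**k * (1 - p)**(n - k) for k in range(n + 1)}

def F(x):
    """Distribution function: F(x) = P(X <= x)."""
    return sum(v for k, v in f.items() if k <= x)

def F_left(x):
    """Left limit of F at x: F(x-) = P(X < x)."""
    return sum(v for k, v in f.items() if k < x)

# The jump F(x) - F(x-) at each possible value equals the mass there.
jumps_match = all(F(k) - F_left(k) == f[k] for k in f)
print(jumps_match)
```

Away from the possible values the two functions agree, so `F` is a step function that is constant between consecutive integers and jumps only at $0, 1, \dots, n$.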

Also, any function satisfying the four properties in the proposition above is called a distribution function.